Part 1 — How We Write Tests Now (and Why Review Became the Bottleneck)

The story I keep seeing on real teams:

We merged a “beautiful” new end-to-end suite in one afternoon.

It was a checkout flow—login → add to cart → apply promo → pay → confirmation. Cursor helped a senior engineer draft ~18 Playwright tests off the PRD and a few screenshots. The suite looked polished: tidy page objects, readable steps, a consistent naming scheme. CI was green. Everyone moved on.

A week later, a bug shipped: users could reach the confirmation page even when the backend returned a payment failure. The tests didn’t catch it because the assertions were… vibes. They checked that “Thank you” was visible and that the URL contained /success. No assertion validated the order state, no network assertion confirmed the API result, and no invariant checked the “paid” status.

Cursor didn’t “fail.” It did exactly what we asked: generate plausible tests fast. We failed at the part that now matters most: intent and review.

That’s the new reality: Cursor makes authoring cheap. Confidence is what got expensive.

The core shift: authoring is no longer the constraint

Cursor’s best superpower isn’t autocomplete. It’s turning a vague idea into runnable code—quickly—by pulling context from your repo and applying multi-file edits via its agent/review workflow.

So the constraint moves downstream:

- Review bandwidth: can someone validate intent, assertions, and maintainability at AI speed?

- Test economics: are we generating coverage… or generating maintenance debt?

- Trust calibration: when CI fails, are we debugging the app—or the model’s hypothesis?

(This is why the headline isn’t “AI test writing.” It’s AI test reviewing.)

Test Automation Workflow Before vs After Cursor

| SDLC Stage | Before AI IDE | With Cursor | Overhead Reduced | New Overhead Introduced | New Bottleneck |
|---|---|---|---|---|---|
| Story grooming | Test plan written later | Test prompts drafted early | Less rework | Prompt consistency | Prompt quality |
| PR phase | Manual test writing | AI-drafted tests in PR | Boilerplate time | Review + style alignment | Reviewer bandwidth |
| CI debugging | Read logs, re-run | AI helps interpret failures | Debug speed | Incorrect hypotheses | Trust calibration |
| Maintenance | Fix selectors manually | AI refactors + suggests | Faster refactor | “Looks right” errors | Validation effort |
| Backlog refinement | Testability discussed informally | Cursor generates “testability questions” for the story | Fewer late surprises | Noise / too many questions | Signal-to-noise |
| Test data setup | Tribal scripts + ad hoc seeding | Cursor drafts seed helpers + fixtures | Less setup time | Hidden coupling | Data drift |
| Flake triage | Manual pattern matching | Cursor suggests likely causes | Less time-to-triage | Confident-but-wrong root cause | Human verification |

The new authoring loop (Playwright/Cypress): how it actually flows now

Here’s the loop that’s working best on senior teams:

- Write the prompt like a spec, not like a request. Include user intent, risks, and “what must never happen.” Cursor can only protect the invariants you name.

- Generate the test skeletons quickly. Let Cursor create structure: suites, helpers, shared setup, basic happy paths.

- Immediately harden assertions and locators. This is where senior QE time goes now. For Playwright, lean on web-first assertions and actionability/auto-waiting patterns instead of manual checks that don’t wait reliably. For Cypress, be intentional with retry-ability and assertion chaining; “it retries” is not a strategy, it’s a mechanism you must design around.

- Review diffs like you’re reviewing production code, because you are. Cursor’s agent review/diff workflow helps, but it doesn’t replace engineering judgment.

- Only then scale coverage. Don’t generate 50 tests before you’ve proven your assertion quality bar on 5.
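The difference between a retrying assertion and a one-shot check is easier to see without a framework in the way. The sketch below is a plain TypeScript model of retry-until-timeout semantics, not Playwright’s or Cypress’s implementation; `retryUntil`, `checkOnce`, and `FakeClock` (which advances virtual time so the example runs deterministically) are hypothetical names.

```typescript
// Minimal clock abstraction so the retry loop can run without real time passing.
interface Clock {
  now(): number;
  sleep(ms: number): void;
}

// Fake clock for the sketch: sleep() advances virtual time instantly.
class FakeClock implements Clock {
  private t = 0;
  now(): number {
    return this.t;
  }
  sleep(ms: number): void {
    this.t += ms;
  }
}

interface RetryResult<T> {
  passed: boolean;
  lastValue: T;
  attempts: number;
}

// Re-reads state via `probe` until `predicate` holds or `timeoutMs` elapses.
// This models the idea behind retrying assertions: the check is re-evaluated
// against fresh state instead of being read once.
function retryUntil<T>(
  probe: () => T,
  predicate: (value: T) => boolean,
  timeoutMs: number,
  intervalMs: number,
  clock: Clock,
): RetryResult<T> {
  const deadline = clock.now() + timeoutMs;
  let attempts = 1;
  let value = probe();
  while (!predicate(value) && clock.now() < deadline) {
    clock.sleep(intervalMs);
    value = probe();
    attempts++;
  }
  return { passed: predicate(value), lastValue: value, attempts };
}

// One-shot check: reads state exactly once, like a manual
// `expect(await locator.isVisible()).toBe(true)` check.
function checkOnce<T>(probe: () => T, predicate: (value: T) => boolean): boolean {
  return predicate(probe());
}

// Usage: a simulated banner that only appears after 300 ms of virtual time.
const clock = new FakeClock();
const bannerVisible = () => clock.now() >= 300;
console.log(checkOnce(bannerVisible, (v) => v)); // false: read at t=0, too early
console.log(retryUntil(bannerVisible, (v) => v, 1000, 100, clock).passed); // true: passes on the 4th read
```

This is the design point behind “it retries is a mechanism”: retrying makes a check patient, not correct. Retrying a weak predicate such as “Thank you is visible” only makes a weak assertion pass more reliably.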

What got easier (real wins, no hype)

1) Boilerplate becomes background noise

Cursor is excellent at producing the first 70%: scaffolding suites, wiring fixtures, creating page helpers, translating patterns across files—especially when it can “understand” the repo context and apply scoped changes.

2) Migration and rewrites stop being scary

Refactors that used to stall for weeks (renaming locators, splitting helpers, reorganizing specs) can be drafted in minutes—then verified. This changes how aggressively teams can pay down automation debt.

3) Debugging gets faster—if you treat suggestions as hypotheses

Cursor can summarize logs, propose likely causes, and draft targeted diagnostics. The speedup is real, but only if your team has a culture of “verify first.”

4) “Testability” conversations happen earlier

When prompts are drafted during grooming, you surface missing IDs, unclear states, and absent observability before the sprint ends.

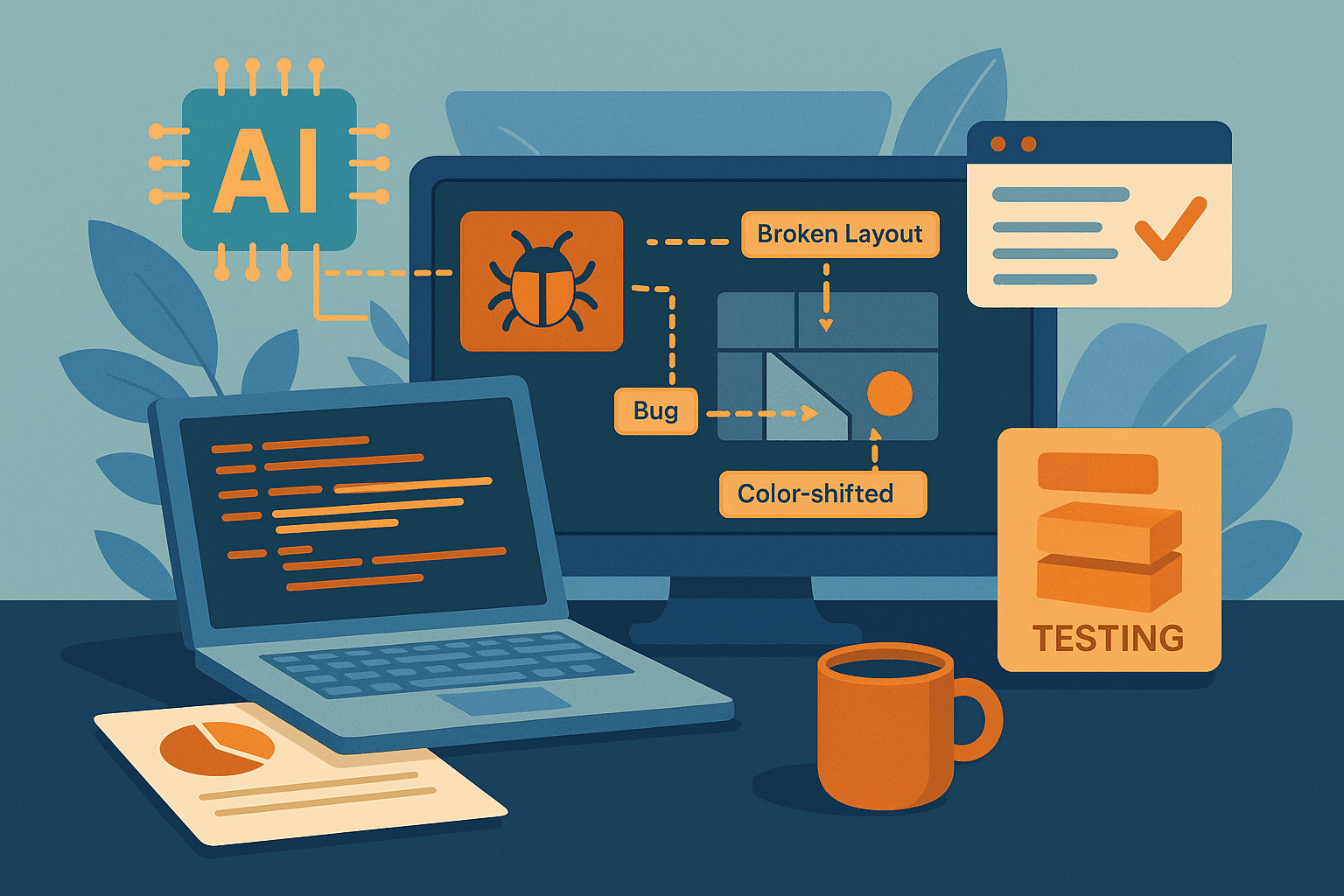

What got harder (the stuff teams underestimate)

1) Review became the bottleneck (and it’s not optional)

AI-generated tests look professional even when they’re logically weak. That’s new. The danger isn’t messy code—it’s credible wrongness.

Your reviewers now need to validate:

- Is the test asserting the right invariant, or just a UI symptom?

- Is it robust to legitimate UI change (copy, layout) without becoming too permissive?

- Is it introducing a maintenance pattern (selector strategy, helper design) you’ll regret?

2) Intent drift: tests that “pass” but don’t protect the business

Cursor will happily generate a test that confirms a page exists even though the transaction failed. This is why teams should bias toward:

- state assertions (order status, entitlement flags)

- network/API assertions (critical calls succeeded)

- invariants (“cannot reach success without payment accepted”)

3) Consistency tax: style, patterns, and “one more helper”

Without guardrails, Cursor increases variance: ten engineers, ten micro-frameworks. Your suite becomes a museum of generated styles.

4) Trust calibration in CI: the confident wrong diagnosis

AI-assisted debugging can lead you straight into rabbit holes. The model is persuasive. Your pipeline must reward verification, not velocity theater.

5) Security / privacy concerns become part of enablement

If you’re in regulated environments, you’ll need explicit policy around indexing, prompts, and data handling. Cursor documents privacy/data governance options (including enterprise controls) and outlines its security approach—use that as your baseline for internal review.

Comparison: Tool tradeoffs that show up specifically in test automation

| Dimension | Cursor advantage | Cursor downside | Practical guidance for QE |
|---|---|---|---|
| Repo-context generation | Strong “codebase understanding” to draft coherent changes across helpers/tests | Can still miss hidden test conventions | Feed it your conventions (lint rules, locator strategy, fixture patterns) as first-class context |
| Multi-file edits | Agent + review workflow makes sweeping refactors feasible | Bigger diffs = harder human validation | Cap generated diffs per PR; require reviewers to run focused smoke checks locally |
| Model choice | Can select among frontier models (useful for different tasks) | Inconsistent output across models | Standardize 1–2 “approved” models per repo + define when to switch |
| Speed | Draft tests fast, iterate fast | Encourages over-generation | Set coverage budgets per story (e.g., 3 critical tests first, then expand) |
| Debug assist | Faster hypotheses | Confident wrong guesses | Treat AI output as “possible causes,” require one concrete reproducer before changing suite |
| Governance | Enterprise privacy/data governance options exist | Policy work is non-trivial | Write a one-page “AI IDE policy” and bake it into onboarding |

Case study: The checkout flow that “passed green” and still shipped a bug

Web app: Subscription checkout (Stripe-like redirect), promo codes, and async order fulfillment.

What Cursor generated (typical):

- Playwright tests that asserted:
  - URL includes /success
  - “Thank you” text is visible
  - Confirmation page renders

What broke in production:

- Backend returned payment_status=failed, but the UI still navigated to confirmation due to a client-side bug. The tests didn’t validate backend state.

How we fixed the suite (the durable pattern):

- Add an assertion that the order API returns paid=true (or equivalent) before accepting “success.”

- Add a negative invariant: if payment fails, the UI must show failure and must not display confirmation.

- Use Playwright’s recommended assertion patterns (auto-retrying assertions) instead of manual checks that can turn into timing hazards.
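The first two fixes can be expressed as one rule that a test evaluates after it has captured the order API response and the page state. The sketch keeps that rule in plain TypeScript so it runs standalone; in a Playwright test the inputs would come from something like `page.waitForResponse(...)` plus locator queries. `CheckoutObservation`, its field names, and `checkoutInvariantViolations` are hypothetical, and `paid` stands in for whatever your order API actually returns.

```typescript
// What a test observed at the end of a checkout attempt (hypothetical shape).
interface CheckoutObservation {
  orderApiStatus: number; // HTTP status of the order lookup
  paid: boolean | undefined; // backend payment state, e.g. paid=true
  confirmationVisible: boolean; // UI: confirmation / "Thank you" page shown
  failureMessageVisible: boolean; // UI: payment-failure message shown
}

// Returns the violated invariants; an empty list means the attempt is consistent.
function checkoutInvariantViolations(obs: CheckoutObservation): string[] {
  const violations: string[] = [];
  // Without a successful order lookup, the test cannot confirm state at all.
  if (obs.orderApiStatus < 200 || obs.orderApiStatus >= 300) {
    violations.push(`order API did not succeed (status ${obs.orderApiStatus})`);
  }
  // Positive invariant: confirmation is only acceptable when the backend says paid.
  if (obs.confirmationVisible && obs.paid !== true) {
    violations.push("confirmation shown without paid=true from the order API");
  }
  // Negative invariant: a failed payment must surface as a visible failure.
  if (obs.paid === false && !obs.failureMessageVisible) {
    violations.push("payment failed but no failure message was shown");
  }
  return violations;
}

// Usage: the production bug from the case study.
const productionBug: CheckoutObservation = {
  orderApiStatus: 200,
  paid: false,
  confirmationVisible: true,
  failureMessageVisible: false,
};
console.log(checkoutInvariantViolations(productionBug).length); // 2
```

Against that bug, the original URL-and-text checks pass while this rule reports two violations: confirmation without a paid order, and no visible failure.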

Result:

- Fewer tests (we cut from 18 to 9), but higher confidence.

- Review time went down because intent was explicit: every test protected a business invariant, not a screen.

Cursor made the first draft cheap. The team made correctness expensive—by choice. That’s the right trade.

Step-by-step adoption playbook (with guardrails that prevent the common failures)

Step 1 — Define “what good looks like” (before anyone generates code)

Guardrails

- A one-page Test Intent Contract:
  - Every E2E must assert at least one business invariant
  - Every “success” path must include a state confirmation (API, DB via service, or observable event)
  - Every test must state the risk it mitigates in a header comment (1 line)
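A contract like this is easier to enforce when each test declares its intent as data that a reviewer or a CI script can check. The sketch assumes a hypothetical `TestIntent` record per E2E test; the field names and `contractViolations` are illustrative, and how you collect them (annotations, tags, or parsing the header comment) is up to your repo.

```typescript
// Hypothetical per-test intent record.
interface TestIntent {
  title: string;
  riskComment: string | undefined; // one-line "risk this test mitigates"
  invariantsAsserted: string[]; // business invariants the test checks
  isSuccessPath: boolean;
  stateConfirmations: Array<"api" | "db-via-service" | "event">;
}

// Checks one test against the three contract rules; returns readable failures.
function contractViolations(t: TestIntent): string[] {
  const problems: string[] = [];
  if (t.invariantsAsserted.length === 0) {
    problems.push(`${t.title}: asserts no business invariant`);
  }
  if (t.isSuccessPath && t.stateConfirmations.length === 0) {
    problems.push(`${t.title}: success path without a state confirmation`);
  }
  const risk = t.riskComment?.trim() ?? "";
  if (risk === "" || risk.includes("\n")) {
    problems.push(`${t.title}: missing or multi-line risk statement`);
  }
  return problems;
}

// Usage: the kind of "vibes" test described in the case study.
const vibesTest: TestIntent = {
  title: "checkout shows thank you",
  riskComment: undefined,
  invariantsAsserted: [],
  isSuccessPath: true,
  stateConfirmations: [],
};
console.log(contractViolations(vibesTest).length); // 3
```

Making intent explicit data is what lets review stay fast: a URL-and-text test fails all three rules before anyone reads its steps.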

Step 2 — Standardize prompt templates (prompt quality is now SDLC quality)

Guardrails

- Use a repo-stored /testing/prompts/ folder:
  - story_prompt.md (risks + invariants + data needs)
  - playwright_prompt.md or cypress_prompt.md (locator strategy, fixtures, conventions)

- Require prompts in grooming for “tier-1” stories (checkout, auth, billing)
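One way to keep story_prompt.md consistent is to generate it from a typed spec that refuses to render without the sections the template requires. This is a sketch under assumptions: the `StoryPromptSpec` shape, the section headings, and `renderStoryPrompt` are hypothetical, not a Cursor feature.

```typescript
// Hypothetical spec for a tier-1 story prompt.
interface StoryPromptSpec {
  story: string;
  userIntent: string;
  risks: string[];
  mustNeverHappen: string[]; // invariants: the model can only protect the ones you name
  dataNeeds: string[];
}

// Renders the spec as markdown for /testing/prompts/story_prompt.md.
// Throws if a required section is empty, so a vague prompt fails fast.
function renderStoryPrompt(spec: StoryPromptSpec): string {
  const required: Array<[string, string[]]> = [
    ["Risks", spec.risks],
    ["Must never happen", spec.mustNeverHappen],
    ["Data needs", spec.dataNeeds],
  ];
  for (const [name, items] of required) {
    if (items.length === 0) {
      throw new Error(`story prompt for "${spec.story}" has no "${name}" entries`);
    }
  }
  const section = (name: string, items: string[]) =>
    [`## ${name}`, ...items.map((i) => `- ${i}`)].join("\n");
  return [
    `# ${spec.story}`,
    `Intent: ${spec.userIntent}`,
    ...required.map(([name, items]) => section(name, items)),
  ].join("\n\n");
}

// Usage: a checkout story prompt drafted during grooming.
const checkoutPrompt = renderStoryPrompt({
  story: "Subscription checkout",
  userIntent: "A user pays for a plan and sees confirmation only after payment succeeds",
  risks: ["Client navigates to confirmation after a failed payment"],
  mustNeverHappen: ["Confirmation page without paid=true from the order API"],
  dataNeeds: ["A declined test payment method", "A valid promo code"],
});
console.log(checkoutPrompt.includes("## Must never happen")); // true
```

Failing at render time moves the “did we name the invariants?” question into grooming, where it is cheapest to answer.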

Step 3 — Start with a “5-test pilot” (prove review mechanics before scaling)

Guardrails

- Limit PRs to:
  - max 300 lines of generated test code per PR
  - max 2 new helpers per PR

- Require:
  - reviewer runs the targeted subset locally
  - CI includes trace/video on failure (don’t debug blind)
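These limits are simple enough to check in CI before a human reviewer opens the PR. The sketch assumes you can summarize a PR as changed files with added-line counts and a "new file" flag (for example, assembled from `git diff --numstat` and `git diff --name-status`), and that path conventions identify tests and helpers. Because a diff can’t tell generated lines from hand-written ones, it conservatively counts all added test code. `PrContext`, the path patterns, and `prGuardrailFailures` are hypothetical; only the two numeric limits come from the list above.

```typescript
interface ChangedFile {
  path: string;
  addedLines: number;
  isNew: boolean;
}

// Hypothetical PR summary plus the two "Require" items as booleans.
interface PrContext {
  files: ChangedFile[];
  ciKeepsTraceOnFailure: boolean;
  reviewerRanSubsetLocally: boolean;
}

const MAX_TEST_LINES = 300;
const MAX_NEW_HELPERS = 2;

// Assumed conventions: helpers live under tests/helpers/, specs end in .spec.ts.
const isHelperFile = (p: string) => p.startsWith("tests/helpers/");
const isTestCode = (p: string) => p.endsWith(".spec.ts") || isHelperFile(p);

function prGuardrailFailures(pr: PrContext): string[] {
  const failures: string[] = [];
  const testLines = pr.files
    .filter((f) => isTestCode(f.path))
    .reduce((sum, f) => sum + f.addedLines, 0);
  if (testLines > MAX_TEST_LINES) {
    failures.push(`${testLines} added test lines (max ${MAX_TEST_LINES})`);
  }
  const newHelpers = pr.files.filter((f) => f.isNew && isHelperFile(f.path)).length;
  if (newHelpers > MAX_NEW_HELPERS) {
    failures.push(`${newHelpers} new helpers (max ${MAX_NEW_HELPERS})`);
  }
  if (!pr.reviewerRanSubsetLocally) failures.push("reviewer has not run the targeted subset locally");
  if (!pr.ciKeepsTraceOnFailure) failures.push("CI does not keep trace/video on failure");
  return failures;
}

// Usage: a PR that is within the helper limit but over the line budget.
const pr: PrContext = {
  files: [
    { path: "tests/checkout.spec.ts", addedLines: 220, isNew: true },
    { path: "tests/promo.spec.ts", addedLines: 140, isNew: true },
    { path: "tests/helpers/cart.ts", addedLines: 40, isNew: true },
  ],
  ciKeepsTraceOnFailure: true,
  reviewerRanSubsetLocally: true,
};
console.log(prGuardrailFailures(pr).join("; ")); // 400 added test lines (max 300)
```

A failing check here is a prompt to split the PR, not a verdict on the tests themselves.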

Step 4 — Create an AI-aware review checklist (this is the new bottleneck)

Guardrails

- Review checklist must include:
  - Assertions validate state, not just presence
  - Locators are resilient (role/text strategy where possible; avoid brittle CSS chains)
  - No “magic waits”
  - Negative paths exist for critical flows (failure must be observable)
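Parts of this checklist can be pre-screened mechanically, leaving reviewers more time for the judgment calls. The sketch is a crude line-by-line regex heuristic, not a real lint rule: it flags fixed sleeps (Playwright’s `page.waitForTimeout(...)`, Cypress’s `cy.wait(<number>)`), one-shot checks such as `expect(await locator.isVisible()).toBe(true)` where a retrying `await expect(locator).toBeVisible()` would do, and CSS selectors with two or more `>` combinators. `reviewFindings` and the rule names are hypothetical; an AST-based lint rule would be more robust.

```typescript
interface Finding {
  line: number;
  rule: string;
  text: string;
}

// Heuristic patterns, checked one source line at a time.
const RULES: Array<{ rule: string; pattern: RegExp }> = [
  // Fixed sleeps; cy.wait('@alias') is not flagged because it waits on a request.
  { rule: "magic-wait", pattern: /\bwaitForTimeout\s*\(|\bcy\.wait\(\s*\d/ },
  // State read once and then asserted, instead of a retrying web-first assertion.
  { rule: "non-retrying-check", pattern: /expect\(\s*await\s[^;]*\.is(Visible|Hidden|Enabled|Checked)\(/ },
  // Selectors passed to locator()/cy.get() containing two or more ">" child combinators.
  { rule: "brittle-css-chain", pattern: /\b(locator|cy\.get)\(\s*['"`][^'"`]*>[^'"`]*>[^'"`]*['"`]/ },
];

function reviewFindings(source: string): Finding[] {
  const findings: Finding[] = [];
  source.split("\n").forEach((text, i) => {
    for (const { rule, pattern } of RULES) {
      if (pattern.test(text)) findings.push({ line: i + 1, rule, text: text.trim() });
    }
  });
  return findings;
}

// Usage: a few lines typical of a first AI draft, plus one hardened assertion.
const generated = [
  "await page.goto('/checkout');",
  "await page.waitForTimeout(3000);",
  "expect(await page.getByText('Thank you').isVisible()).toBe(true);",
  "await page.locator('div.main > div > span.total').click();",
  "await expect(page.getByRole('heading', { name: 'Thank you' })).toBeVisible();",
].join("\n");
console.log(reviewFindings(generated).map((f) => `${f.line}:${f.rule}`).join(", "));
// 2:magic-wait, 3:non-retrying-check, 4:brittle-css-chain
```

Anything this flags still needs a human decision, and a clean result says nothing about whether the assertions protect the right invariant.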

Step 5 — Instrument the economics (measure what Cursor changes)

Guardrails

Track:

- authoring time saved (easy)

- review time added (usually hidden)

- flake rate trend

- “escaped defects covered by automation” trend
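A small weekly rollup is enough to put the hidden cost next to the visible win. The sketch assumes you already log estimated authoring minutes saved, review minutes spent on AI-drafted test PRs, CI runs, and runs that failed and then passed on retry with no code change (one common flake definition). It reads “escaped defects covered by automation” as escaped bugs in flows the suite claims to cover; `WeekStats`, `summarize`, and `trend` are hypothetical, and the numbers in the usage are made up.

```typescript
interface WeekStats {
  week: string;
  authoringMinutesSaved: number; // estimated or self-reported
  reviewMinutesAdded: number; // time spent reviewing AI-drafted test PRs
  ciRuns: number;
  flakyRuns: number; // assumption: failed, then passed on retry with no code change
  escapedDefectsInCoveredFlows: number;
}

interface WeekSummary {
  week: string;
  netMinutesSaved: number;
  flakeRate: number;
  escapedDefectsInCoveredFlows: number;
}

function summarize(s: WeekStats): WeekSummary {
  return {
    week: s.week,
    // Authoring savings alone is the easy number; subtracting review is the honest one.
    netMinutesSaved: s.authoringMinutesSaved - s.reviewMinutesAdded,
    flakeRate: s.ciRuns === 0 ? 0 : s.flakyRuns / s.ciRuns,
    escapedDefectsInCoveredFlows: s.escapedDefectsInCoveredFlows,
  };
}

// Direction of change from the first to the last value.
function trend(values: number[]): "up" | "down" | "flat" {
  if (values.length < 2) return "flat";
  // Non-null assertions are safe after the length check above.
  const delta = values[values.length - 1]! - values[0]!;
  if (delta > 0) return "up";
  if (delta < 0) return "down";
  return "flat";
}

// Made-up numbers, for illustration only.
const weeks: WeekStats[] = [
  { week: "W1", authoringMinutesSaved: 600, reviewMinutesAdded: 240, ciRuns: 200, flakyRuns: 10, escapedDefectsInCoveredFlows: 2 },
  { week: "W2", authoringMinutesSaved: 720, reviewMinutesAdded: 480, ciRuns: 220, flakyRuns: 22, escapedDefectsInCoveredFlows: 1 },
];
const summaries = weeks.map(summarize);
console.log(
  trend(summaries.map((s) => s.netMinutesSaved)),
  trend(summaries.map((s) => s.flakeRate)),
); // down up
```

In this made-up example authoring savings grew week over week, but review cost grew faster, so the net trend points down. That is the cost that stays invisible if you only track the first line.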

Cursor improves authoring velocity inside the IDE, but teams still need a system for understanding suite health, flake clustering, failure forensics, coverage versus risk, and release-readiness signals across environments. That’s where an AI-driven testing service and platform like Omniit.ai becomes the stabilizer: we know quality engineering inside out, and we turn “more tests” into “more confidence” with actionable quality intelligence.