When I read articles claiming that “QA will be the last job standing in the age of AI,” I nod, not because I believe QA is magically immune to automation, but because I believe proper QA has always been a safeguard. The reality is not that AI saves QA; it highlights how many organizations never built QA as a foundational part of their SDLC. In many cases, what’s been compromised wasn’t QA. It was the process. What AI does is shine a light on that compromise.

The Real Root Cause — Not AI, but Quality-Compromised Processes

Too often, the real bottleneck isn’t AI; it’s that QA was never truly integrated into the development lifecycle. Instead of being a built-in discipline from the start, testing is often treated as a final gate — a phase where we scramble to catch everything before release. That results in risk, rework, delays — and sometimes catastrophic failures post-release.

Does “shift left” sound familiar? Decades of best practices tell us that the earlier defects are found, the cheaper and easier they are to fix. For instance, studies show that defects identified during design or early planning cost dramatically less to address than those found during testing or after release. Fixing a bug during implementation may cost roughly six times more than catching it at design, and once the bug reaches production, the cost can balloon by orders of magnitude.

Embedding QA early, that is, at the requirements, design, and even architectural review phases, offers far better ROI. (Medium)

By contrast, when QA is relegated to the end, teams end up firefighting: last-minute bug hunts and bug bashes, rushed patches, high-maintenance code, reactive cycles, and, in the end, a product that may barely stand up to real-world use. When AI accelerates development, that flawed process only accelerates failure.

AI — Not a Silver Bullet, But a Force Multiplier for Quality

AI isn’t what made QA critical again. But AI does make teams more vulnerable. This “extended” vulnerability calls for more rigorous quality disciplines. If we use AI wrongly, we’ll ship more code, more features, more quickly — but also with more risk.

On the flip side, AI gives us powerful opportunities to build better QC/QE processes: to really “shift left”, scale testing, and catch issues early.

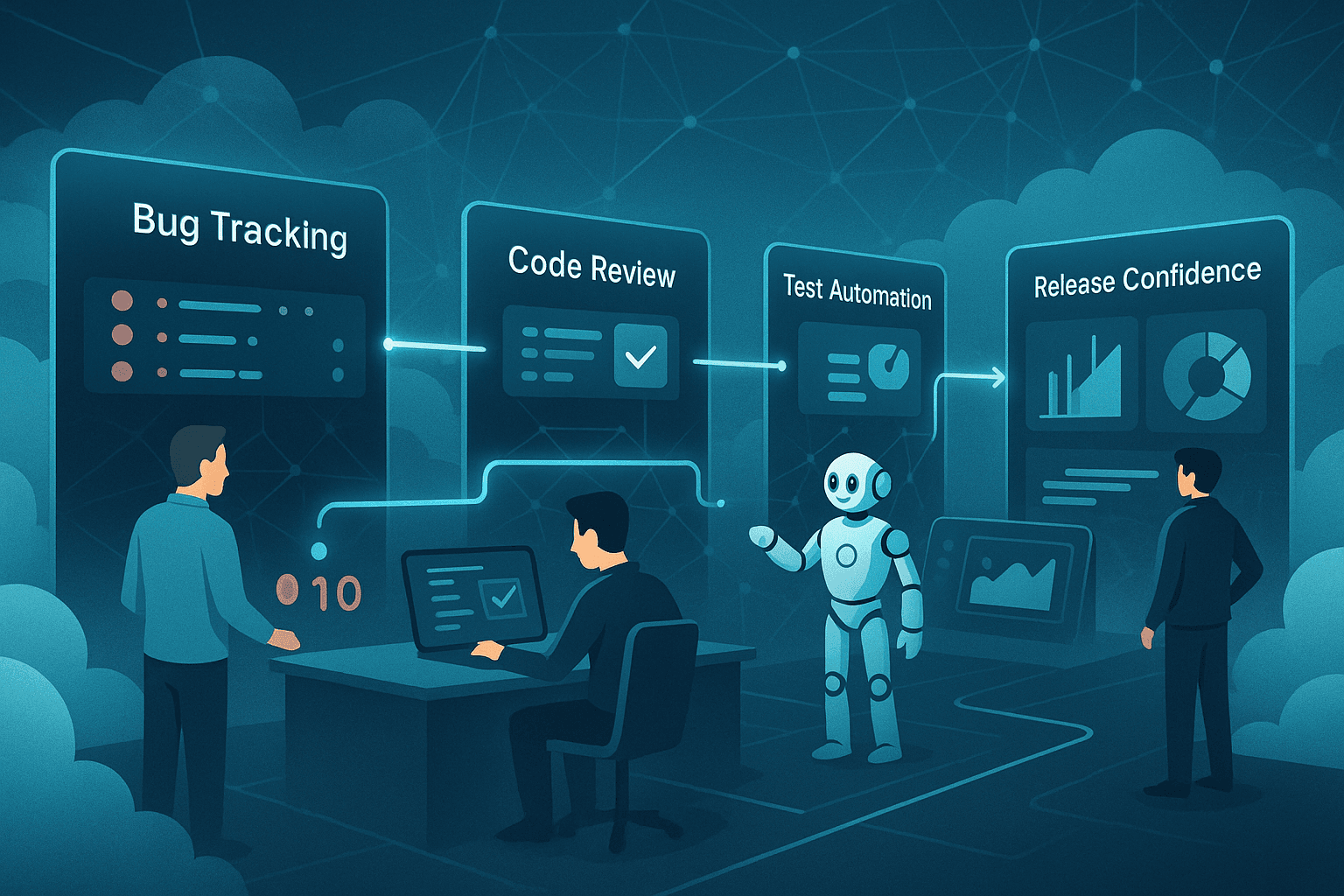

- Automated test generation & data preparation at scale

With generative AI, we can create test scaffolding, edge-case scenarios, and realistic test data rapidly, far beyond what manual QA could cover in the same time. This enables broad coverage early in the lifecycle and frees human QE resources for higher-value tasks.

- Risk-based testing & defect prediction

AI can help analyze code churn, historical defect patterns, and change frequency, then flag modules or features that look risky. That lets QA focus strategically, not exhaustively, increasing impact per effort. Risk-based testing has long been advocated as an efficient way to optimize QA effort and cost versus benefit.

- Continuous feedback loops integrated with CI/CD

In a world where AI can generate code rapidly, integrating automated tests — static analysis, unit tests, integration tests — into CI pipelines catches many issues long before code reaches QA or production. That dramatically reduces rework, lowers risk, and preserves delivery velocity while improving quality.
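As a rough illustration of the risk-based idea in the list above, the sketch below ranks modules by a weighted blend of churn, historical defects, and change frequency. Everything here is an assumption made for illustration: the `ModuleStats` fields, the weights, and the sample numbers are hypothetical, and a real model would be calibrated against a team's own defect history.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    churn: int         # lines changed in a recent window (hypothetical input)
    past_defects: int  # defects historically traced to this module
    changes: int       # number of commits touching the module


def normalize(values):
    """Scale values to 0..1 by dividing by the maximum (all zeros stay zero)."""
    top = max(values)
    return [v / top if top else 0.0 for v in values]


def rank_by_risk(modules, weights=(0.4, 0.4, 0.2)):
    """Return (name, score) pairs sorted from highest to lowest risk.

    The weights are illustrative assumptions, not recommended values.
    """
    churn = normalize([m.churn for m in modules])
    defects = normalize([m.past_defects for m in modules])
    changes = normalize([m.changes for m in modules])
    w_churn, w_defects, w_changes = weights
    scored = [
        (m.name, round(w_churn * c + w_defects * d + w_changes * f, 3))
        for m, c, d, f in zip(modules, churn, defects, changes)
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up sample data, only to show the shape of the inputs.
modules = [
    ModuleStats("billing", churn=800, past_defects=12, changes=40),
    ModuleStats("search", churn=200, past_defects=3, changes=10),
    ModuleStats("profile", churn=50, past_defects=0, changes=5),
]
ranking = rank_by_risk(modules)
for name, score in ranking:
    print(f"{name}: {score}")
```

A ranking like this only suggests where to look first. Deciding whether a high-scoring module actually matters to the business is still a human call.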

None of these replaces the need for human judgment though. AI or traditional automation in general cannot:

- Flag ambiguous business logic or semantic correctness

- Challenge assumptions, oracles, edge-case relevance

- Evaluate design choices against long-term maintainability, architecture constraints, compliance or security requirements

- Serve as the guardians of trust, context, and risk awareness that only experienced QE professionals can deliver

In short: AI becomes a force multiplier. But human oversight, leadership, and a disciplined process remain indispensable.

From Bug-Hunter to AI-Empowered QE Leader: A Path Forward

So, if you lead a QA or QE team, or want to shape one for the future, here’s a roadmap to stay relevant, impactful, and indispensable in the AI era:

1. Re-embed QA throughout the SDLC — shift-left, early and often

Make QA involvement non-negotiable from the earliest stages: requirements definitions, architecture reviews, design discussions, before a single line of code is written. Early QA participation reduces costly rework and finds structural issues before they become code-level problems. (Medium)

Static reviews (spec reviews, architectural inspections, peer reviews) — akin to early “inspections” — can catch issues far upstream when they’re cheapest to fix. This is not just “nice to have”; it’s risk management. (Wikipedia)

2. Combine AI-driven automation with human-led risk and impact analysis

Use AI to scale test generation, flag high-risk modules, and surface likely defect zones — but let human QE leads, architects, and stakeholders layer in context: business impact, usage patterns, compliance, maintainability, user experience. This hybrid model gives you both coverage and wisdom.

Adopt risk-based testing to prioritize efforts based on likely impact rather than trying to test everything exhaustively.

3. Shift the QA value metric — from “bugs found” to “risk prevented / trust delivered”

If QA is still evaluated on the number of bugs caught or on test coverage percentages, you’re stuck in old-school thinking. Instead, measure defect-escape rate, incidents detected after release, time to detect/fix issues, business impact avoided, reliability, compliance metrics, user satisfaction. That reframes QA not as a cost center, but as a risk mitigator and business enabler.
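To make a couple of these metrics concrete, here is a minimal sketch that computes a defect-escape rate and mean time to detect and fix from a list of defect records. The `Defect` record, its field names, and the sample data are hypothetical. The definition used (escaped defects divided by all known defects) is one common convention, assumed here rather than taken from any standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Defect:
    introduced: datetime
    detected: datetime
    fixed: Optional[datetime]   # None while the defect is still open
    found_in_production: bool


def defect_escape_rate(defects):
    """Share of known defects that were first found in production."""
    if not defects:
        return 0.0
    escaped = sum(1 for d in defects if d.found_in_production)
    return escaped / len(defects)


def mean_hours(deltas):
    """Average a collection of timedeltas, in hours (None if empty)."""
    deltas = list(deltas)
    if not deltas:
        return None
    return sum(d.total_seconds() for d in deltas) / len(deltas) / 3600


def mean_time_to_detect(defects):
    return mean_hours(d.detected - d.introduced for d in defects)


def mean_time_to_fix(defects):
    # Only defects that have been fixed contribute to this average.
    return mean_hours(d.fixed - d.detected for d in defects if d.fixed)


# Made-up sample data: three defects caught before release, one escaped.
t0 = datetime(2025, 1, 1)
h = lambda n: t0 + timedelta(hours=n)
defects = [
    Defect(t0, h(2), h(6), False),
    Defect(t0, h(4), h(5), False),
    Defect(t0, h(6), None, False),
    Defect(t0, h(48), h(60), True),
]
escape_rate = defect_escape_rate(defects)
mttd = mean_time_to_detect(defects)
mttf = mean_time_to_fix(defects)
print(escape_rate, mttd, mttf)
```

Tracked over several releases, numbers like these show whether quality work is preventing risk, which a raw bug count cannot.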

4. Treat AI-generated code as “drafts,” not “finished goods”

Code produced or heavily aided by AI should still pass through the same rigorous quality checkpoint processes: code reviews, static analysis, unit/integration tests, security scans, and human semantic review. AI’s speed is a benefit — but without discipline, it also becomes a risk multiplier.
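One way to picture "same checkpoints for every change" is a merge gate that ignores who, or what, wrote the code. The sketch below is an assumption-laden illustration: the check names mirror the list in the paragraph above, while the `change` dictionary shape and the `ai_assisted` flag are hypothetical and not tied to any specific CI system.

```python
# Checks every change must pass, taken from the checkpoints named in the text.
REQUIRED_CHECKS = (
    "code_review",
    "static_analysis",
    "unit_tests",
    "integration_tests",
    "security_scan",
    "human_semantic_review",
)


def missing_checks(passed):
    """Return the required checks that have not passed, in a stable order."""
    return [check for check in REQUIRED_CHECKS if check not in passed]


def can_merge(change):
    """A change may merge only when no required check is missing.

    The 'ai_assisted' flag is deliberately not consulted: AI-generated
    drafts get no shortcut and no separate, weaker gate.
    """
    return not missing_checks(change["passed"])


# Hypothetical change records for illustration.
ai_draft = {
    "ai_assisted": True,
    "passed": {"static_analysis", "unit_tests"},
}
reviewed_change = {
    "ai_assisted": True,
    "passed": set(REQUIRED_CHECKS),
}

print(can_merge(ai_draft), missing_checks(ai_draft["passed"]))
print(can_merge(reviewed_change))
```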

5. Build a culture of quality: education, collaboration, responsibility

Transform teams’ mindset from “I write code, testers catch bugs” to “we build quality together.” Encourage developers, QAs, and business stakeholders to collaborate early, own quality, and understand tradeoffs. Invest in training, automation, tooling. At the end of the day, quality isn’t a department — it’s a culture.

Danger of Ignoring the SDLC Bottleneck — AI Might Make Things Worse

If teams treat AI as a panacea and continue with flawed processes, the consequences could be amplified:

- Faster code generation → more surface area → more potential defects

- Lack of early QA involvement → defects propagate deeper into architecture, making them harder and costlier to fix

- Overloaded QA teams drowning under volume — leading to burnout, shortcuts, or superficial testing

- Technical debt buildup — with brittle, hard-to-maintain, insecure code becoming standard

Worst of all: you may not realize the damage until it’s too late — production incidents, security breaches, systemic failures, reputational losses. Given the scale of modern software impact, this isn’t a hypothetical. The cost of poorly built software isn’t just development delay — it’s business risk. (Forbes)

What This Means for QE-Forward Organizations

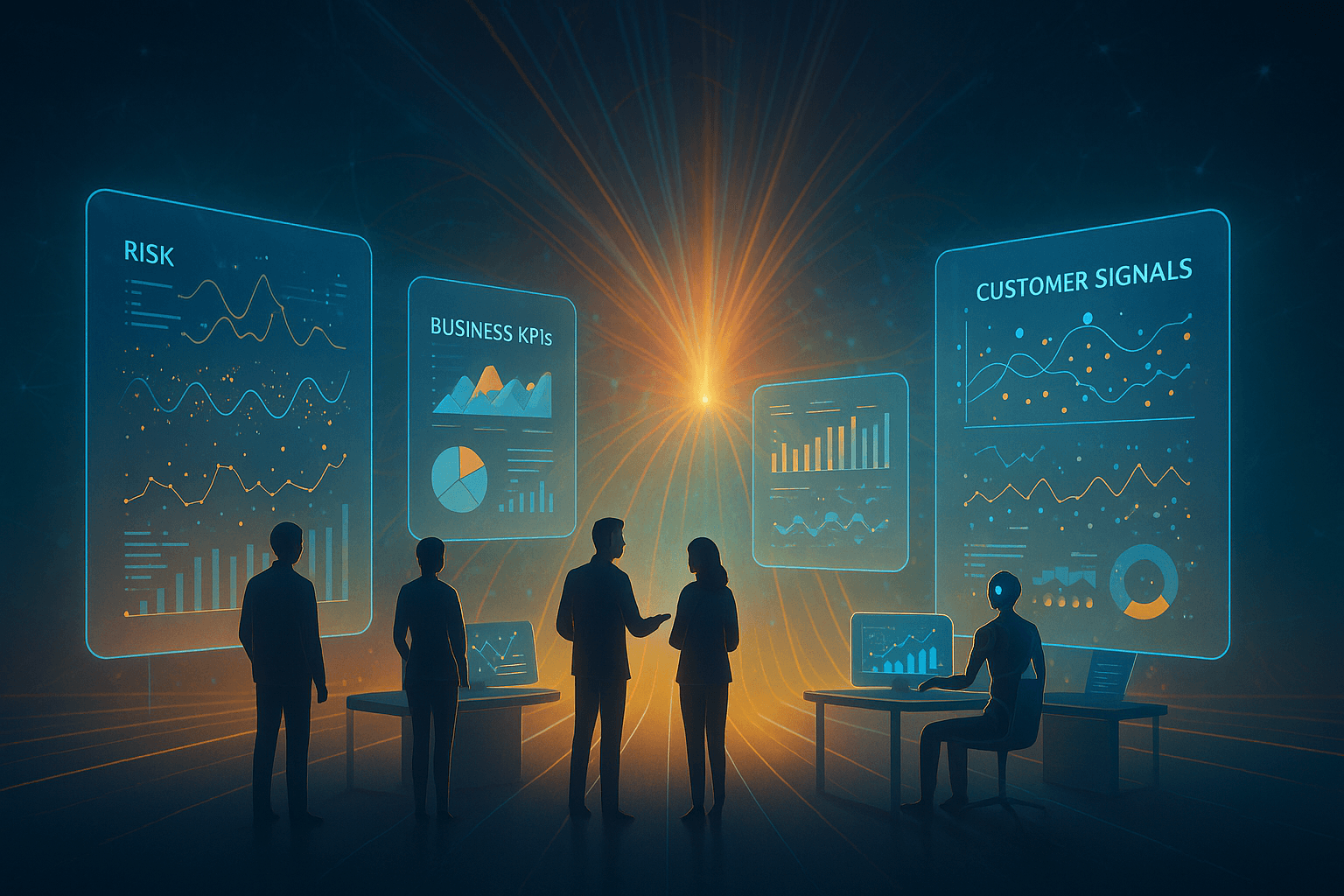

At Omniit.ai, a QE-founded business, what we’re building does not just automate tests; it enables a quality-first culture. Our platform helps teams:

- Integrate QA from the very start — so quality is baked in, not bolted on

- Prioritize risk — allowing smarter allocation of QA effort where it matters

- Automate repetitive tasks — freeing human QE professionals to focus on context, design, and judgment

- Provide dashboards that link test outcomes, coverage, risk metrics, and business KPIs, helping leadership see QA as a strategic function rather than a cost

With such a platform, quality organizations can move from reactive bug-fixing to proactive quality engineering, from firefighting to predictable, reliable releases. That’s where QA becomes not just necessary, but a competitive differentiator, a trust enabler, and a foundation for responsible, scalable AI-augmented development.

Final Thoughts: QA Isn’t the Last Job Standing — It’s the First Line of Trust

AI won’t magically eliminate bugs, nor will it guarantee perfect software. What it does do is make code production faster, broader, and more volatile. In that landscape, QA doesn’t just survive — it becomes essential. But only if teams treat quality with the seriousness it deserves.

If we shift QA left, embed it in every phase, combine smart automation with human judgment, and measure value in risk prevented — then QA doesn’t have to be the last job standing. It becomes the first line of defense. The central guardrail that ensures software is not just built — but built right.

References and Further Reading

- Integrating Early QA in SDLC: Costs vs. Benefits — BetterQA (2024) (BetterQA)

- Why QA Should Be Involved Early in the Software Development Lifecycle — Medium / TestWithBlake (2025) (Medium)

- Shift-Left Testing and its Benefits for Modern Development — QATouch / general QA blogs (2025) (qatouch.com)

- Quality Assurance in Software Development Lifecycle: Strategic Approach — Selementrix (2025) (selementrix.ch)

- Early Testing & Static Testing Benefits — TestFort (2024) (TestFort)

- Risk-Based Testing Approaches & Metrics — IJCA/Risk-Based Testing Survey (2019) (IJCA)

- Software Quality Assurance: Best Practices & Key Benefits — QualityZe (2025) (qualityze.com)

- Development Testing and Code Integrity Practices — Wikipedia / Dev-testing overview (2025) (Wikipedia)

- Costs of Poor Software Quality — Forbes / CISQ estimate (2023) (Forbes)