Latest Blog Posts

Discover insights on AI testing, quality engineering, and automation

Is Your AI Biased? How to Test for Fairness in 2026 with Sample

In the world of finance, a biased AI isn’t just a technical flaw; it’s a business risk with real regulatory and reputational stakes. As a seasoned QA leader, I’ve seen our industry evolve: in 2026, ensuring fairness in AI is as critical as validating accuracy or uptime. Senior engineers and quality professionals can no longer […]

Guide to Selecting AI-Powered Testing Tools for Modern Automation Frameworks

AI-powered test automation isn’t the problem. Choosing the right way to adopt it is. Engineering leaders are flooded with AI testing platforms promising speed, intelligence, and lower cost — yet many teams still struggle to turn those promises into real production impact. The challenge isn’t capability. It’s decision timing and fit. There is no universal […]

Meaningful QA/QE in 2026: Why LLM Evaluation Literacy Is Now a Core Skill (and How to Build It)

Here is the uncomfortable truth: your “green CI” doesn’t mean your AI feature is safe to ship! If you’ve ever shipped a release where automated tests were green… and production still lit up, you already understand the heart of the LLM era. That’s why LLM evaluation isn’t an “ML team thing.” It’s the next evolution […]

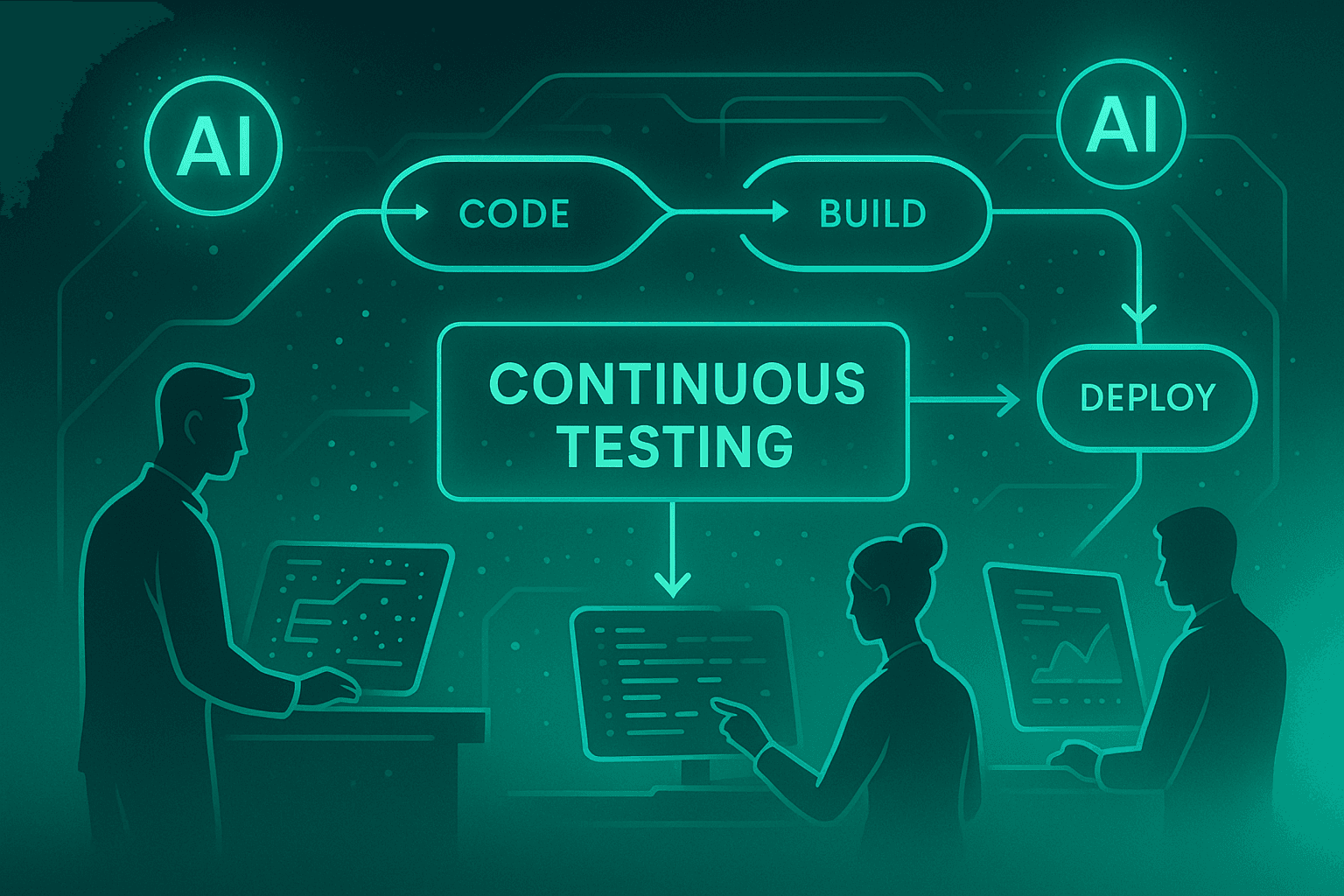

QAOps and Continuous Testing: Embedding Quality in CI/CD with AI

Software teams love to talk about speed, but nobody wants to talk about the cost of going fast. Releases pile up, pipelines grow increasingly complex, and quality becomes a silent tax—felt only when production issues erupt or customer trust slips away. That’s exactly why QAOps has surged to the forefront. It reflects a shift from […]

QA First — Not Last: Why AI Should Spark a Return to Quality-Focused SDLC, Not a “Ship and Hope” Culture

When I read articles claiming that “QA will be the last job standing in the age of AI,” I nod, not because I believe QA is magically immune to automation, but because I believe proper QA has always been a safeguard. The reality is not that AI saves QA; it highlights how many organizations never […]

Defining and Monitoring Quality Metrics in an Agentic RAG QE System

It is easy to fall in love with shiny AI testing dashboards. They glow with promise: self-healing tests, intelligent agents, adaptive pipelines. But the fundamental question we need to answer is: Is all this intelligence actually making quality better? That’s the question I had the first time our RAG-based test agents started generating their own […]