Software teams love to talk about speed, but nobody wants to talk about the cost of going fast. Releases pile up, pipelines grow increasingly complex, and quality becomes a silent tax—felt only when production issues erupt or customer trust slips away.

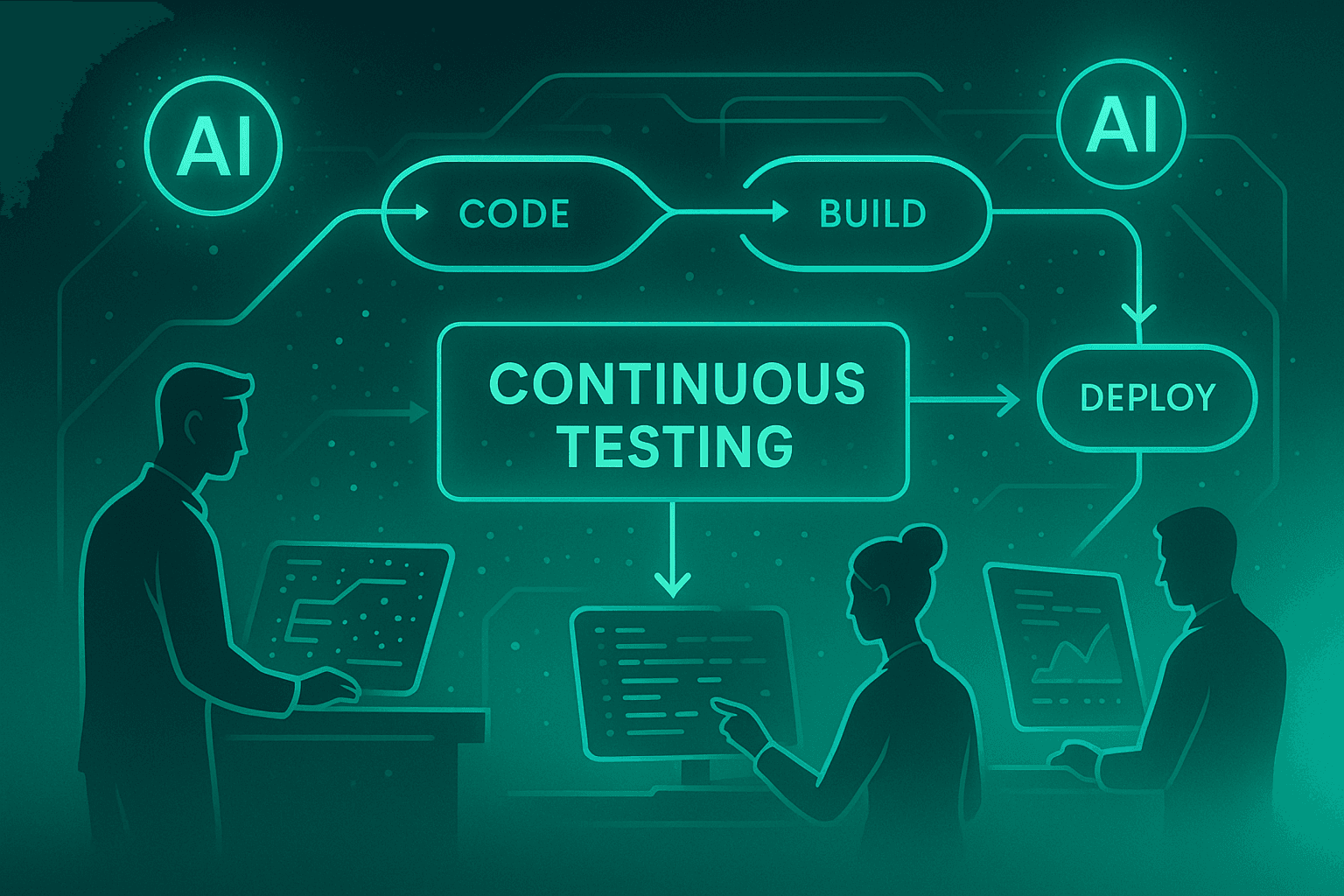

That’s exactly why QAOps has surged to the forefront. It reflects a shift from “testing as a gate” to quality as a real-time, AI-assisted service baked into every stage of CI/CD. Continuous Testing is no longer a buzzword; it’s the scaffolding that keeps modern software systems stable as they race ahead.

And with AI reshaping engineering workflows, QAOps is transforming from operational plumbing into a strategic advantage—one where platforms like Omniit.ai equip teams with autonomous test generation, intelligent validation, and agent-powered test execution at scale.

This article explores how QAOps and AI-powered Continuous Testing are redefining the DevOps quality ecosystem, and how teams can embed quality into every commit, build, and deployment without slowing down.

What QAOps Really Means (Beyond the Buzzwords)

If you’ve ever watched a CI pipeline churn during a busy release, you’ve felt that quiet tension: everyone hopes automation catches what matters, but nobody is completely sure. QAOps exists to erase that uncertainty.

It’s no longer:

“QE tests after development finishes.”

but rather:

“Quality is continuously evaluated as software flows through the system.”

Instead of QA being an external checkpoint, QAOps embeds quality signals directly into the pipeline’s bloodstream. Every commit, PR, environment change, and deployment becomes a quality event, observed and interpreted by AI-driven validation agents.

(This is actually where Omniit.ai comes in—we are turning QAOps from theory into operational reality.)

Continuous Testing Needs AI to Truly Work (Here is Why)

Continuous Testing only shines when tests evolve as quickly as the product does. That’s where traditional automation falls apart. Humans simply can’t keep up—not with selector changes, API schema drift, microservice dependencies, or daily merges.

AI steps in where speed and complexity exceed human bandwidth.

To make this more concrete, here’s a focused comparison:

How AI Changes Continuous Testing

| Without AI | With AI in QAOps |
|---|---|
| Test scripts constantly break after UI/API changes. | Self-healing selectors + schema-aware validation keep tests stable. |
| Developers wait hours for regression cycles. | Real-time test generation + instant diff reasoning in PRs. |
| QE teams manually triage endless failures. | AI clusters failures by semantic meaning & root cause. |
| Quality gates feel rigid or arbitrary. | AI applies adaptive, risk-based gating rules. |
| Tests cover what’s easy, not what’s risky. | AI generates tests strategically based on change impact. |

It is easy to see why teams adopting AI-first pipelines report significant gains in pipeline stability and QE productivity.

AI Generates Tests at the Speed of Code

Instead of QE teams scrambling after each feature merge, AI agents now act like hyper-fast junior testers:

They read new code, inspect updated components, and instantly produce tests that align with:

- API contracts

- DOM structures

- User workflows

- Edge-case patterns from historical bugs

It’s not replacing expertise—it’s amplifying it. QE architects finally get breathing room to focus on strategy and governance instead of endless catch-up work.
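The contract-driven part of this is easier to picture with a small example. The sketch below is not how Omniit.ai or any particular agent works; it assumes a hypothetical, simplified `FieldSpec` contract format and shows the kind of boundary-value cases a generator can derive mechanically from an API contract, leaving the agent (or a QE architect) to decide which ones matter.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical, simplified contract entry for one request field."""
    name: str
    kind: str            # "int" or "str"
    minimum: int = 0     # min value (int) or min length (str)
    maximum: int = 100   # max value (int) or max length (str)
    required: bool = True


def boundary_cases(spec: FieldSpec) -> list[tuple[Any, bool]]:
    """Return (value, should_be_accepted) pairs at and just past the contract edges."""
    if spec.kind == "int":
        cases = [
            (spec.minimum, True),
            (spec.maximum, True),
            (spec.minimum - 1, False),
            (spec.maximum + 1, False),
        ]
    elif spec.kind == "str":
        cases = [
            ("a" * spec.minimum, True),
            ("a" * spec.maximum, True),
            ("a" * (spec.maximum + 1), False),
        ]
        if spec.minimum > 0:
            cases.append(("a" * (spec.minimum - 1), False))
    else:
        raise ValueError(f"unsupported kind: {spec.kind}")
    if spec.required:
        cases.append((None, False))  # a missing required field must be rejected
    return cases


def generate_tests(contract: list[FieldSpec]) -> list[dict]:
    """Expand every field into named test cases that a runner could execute."""
    tests = []
    for spec in contract:
        for value, accepted in boundary_cases(spec):
            tests.append({
                "name": f"{spec.name}={value!r}",
                "field": spec.name,
                "value": value,
                "expect_accepted": accepted,
            })
    return tests
```

For a contract like `[FieldSpec("quantity", "int", 1, 99), FieldSpec("coupon", "str", 0, 12, required=False)]`, this yields eight cases, including `quantity=100` and a missing `quantity`, both expected to be rejected. The mechanical expansion is the cheap part; prioritizing cases by change impact and historical bugs is where the AI and the human expertise come in.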

AI Keeps Tests Healthy Through Self-Healing

Here’s the part every automation engineer dreams about: tests that repair themselves.

In practice, good self-healing is more validation than blind fixing. For example:

- If a selector changes, the AI checks multiple cues before healing.

- If an API returns a modified schema, the AI flags contextual differences.

- If a flow breaks, the AI correlates it to code changes instead of marking it flaky.

This creates an experience that feels more like working with a thoughtful coworker, not a brittle automation engine.
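The selector case is the easiest one to sketch. The example below is a minimal illustration, not Omniit.ai’s healing logic: it assumes a hypothetical `Element` snapshot of the last known-good element and hand-picked `CUE_WEIGHTS`. The point it shows is that healing requires several independent cues to agree, and that a weak match goes to human review instead of being silently “healed.”

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Element:
    """Hypothetical snapshot of a DOM element's identifying attributes."""
    tag: str
    text: str = ""
    attrs: dict[str, str] = field(default_factory=dict)


# Hypothetical weights: how much each cue counts toward a match.
CUE_WEIGHTS = {"tag": 0.2, "text": 0.3, "data-testid": 0.3, "aria-label": 0.2}


def match_score(old: Element, candidate: Element) -> float:
    """Score a candidate against the last known-good element using several cues."""
    score = 0.0
    if old.tag == candidate.tag:
        score += CUE_WEIGHTS["tag"]
    if old.text and old.text == candidate.text:
        score += CUE_WEIGHTS["text"]
    for attr in ("data-testid", "aria-label"):
        value = old.attrs.get(attr)
        if value and value == candidate.attrs.get(attr):
            score += CUE_WEIGHTS[attr]
    return score


def heal(old: Element, candidates: list[Element],
         auto_threshold: float = 0.7,
         review_threshold: float = 0.4) -> tuple[str, Optional[Element]]:
    """Return ('healed' | 'needs_review' | 'fail', matched element or None)."""
    if not candidates:
        return "fail", None
    best = max(candidates, key=lambda c: match_score(old, c))
    score = match_score(old, best)
    if score >= auto_threshold:
        return "healed", best          # several cues agree: safe to heal
    if score >= review_threshold:
        return "needs_review", best    # plausible, but a QE engineer should confirm
    return "fail", None                # treat as a real failure, not flakiness
```

If a button keeps its tag, text, and `data-testid` but its generated `id` changes, the score is 0.8 and the test heals. A button that only shares its tag and text scores 0.5 and lands in the review queue, which is the governance step that keeps self-healing from masking real regressions.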

AI Turns Failure Noise Into Actionable Insight

Consider the classic CI meltdown: 42 tests fail, Slack lights up, and everyone blames everyone else.

AI cuts through the chaos by grouping failures semantically. Example:

“These 17 tests failed because checkout-service version 6.1 introduced a currency format change.”

Exactly the kind of insight QE engineers wish pipelines would give them—but never did.
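To make the grouping idea concrete, here is a deliberately simple stand-in. Real semantic clustering typically relies on richer models; this sketch swaps in signature-based grouping, which normalizes error messages (hex addresses, quoted values, numbers) so that failures with the same underlying cause collapse into one bucket. The function names are illustrative only.

```python
import re
from collections import defaultdict

# Details that vary between otherwise-identical failures (applied in order).
_VOLATILE = [
    (re.compile(r"0x[0-9a-fA-F]+"), "<hex>"),
    (re.compile(r"'[^']*'|\"[^\"]*\""), "<str>"),
    (re.compile(r"\d+(?:\.\d+)?"), "<num>"),
]


def signature(message: str) -> str:
    """Collapse volatile details so failures with the same cause compare equal."""
    sig = message.strip()
    for pattern, placeholder in _VOLATILE:
        sig = pattern.sub(placeholder, sig)
    return sig


def cluster_failures(failures: dict[str, str]) -> list[tuple[str, list[str]]]:
    """Group failing test names by error signature, largest group first."""
    groups: dict[str, list[str]] = defaultdict(list)
    for test_name, message in failures.items():
        groups[signature(message)].append(test_name)
    return sorted(groups.items(), key=lambda item: len(item[1]), reverse=True)
```

Two currency assertions such as `expected '10.00 USD' got '10,00 USD'` and `expected '99.50 USD' got '99,50 USD'` end up in the same cluster, while an unrelated timeout stays separate. Attaching the triggering commit or service version to each cluster is what turns “these tests failed” into “these tests failed because of this change.”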

How QAOps Fits Into CI/CD: A Modern Blueprint

Rather than listing pipeline steps as bullet points, it is more intuitive to visualize how quality flows through a pipeline. When AI is embedded, quality doesn’t just appear at checkpoints; it moves through the CI/CD loop, shaping decisions at every turn.

Seen through a human’s eyes, the flow looks like this:

1. Everything starts with an event

- A PR opens.

- A feature flag toggles.

- A microservice is redeployed.

- A dataset refreshes.

In QAOps, these become quality triggers—not afterthoughts.
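One way to picture “events as quality triggers” is a routing table from event types to test suites. The sketch below assumes a hypothetical `PipelineEvent` shape and made-up suite names; a real setup would wire this into whatever webhooks your SCM, feature-flag service, and deployer emit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineEvent:
    """Hypothetical normalized event from the SCM, flag service, or deployer."""
    kind: str     # e.g. "pr_opened", "flag_toggled", "service_redeployed"
    target: str   # repo path, flag name, service name, or dataset name


# Hypothetical routing table: which suites each kind of event should trigger.
ROUTES = {
    "pr_opened": ["unit", "contract", "impacted_e2e"],
    "flag_toggled": ["flag_paths_e2e"],
    "service_redeployed": ["smoke", "contract"],
    "dataset_refreshed": ["data_quality"],
}


def quality_triggers(event: PipelineEvent) -> list[str]:
    """Turn a pipeline event into the suites to run, scoped to the event's target."""
    suites = ROUTES.get(event.kind)
    if suites is None:
        # Unknown events still get a baseline check instead of being ignored.
        suites = ["smoke"]
    return [f"{suite}:{event.target}" for suite in suites]
```

Redeploying `checkout-service` would trigger `smoke:checkout-service` and `contract:checkout-service`. The fallback to a smoke run is a design choice: an event the table doesn’t know about should still produce a quality signal rather than none.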

2. Distributed execution eliminates bottlenecks

Tests run across cloud runners so the pipeline stays fast—even under heavy regression load.
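Keeping distributed runs fast depends mostly on how tests are split across runners. As a minimal sketch, assuming you store historical durations per test, the greedy longest-processing-time-first heuristic below always hands the next-longest test to the runner with the least work so far. It is an approximation, not an optimal split, and `shard_tests` is an illustrative name rather than any tool’s API.

```python
import heapq


def shard_tests(durations: dict[str, float], runners: int) -> list[list[str]]:
    """Spread tests across runners so total runtimes stay roughly balanced.

    Greedy longest-processing-time-first: sort tests by historical duration
    (longest first) and give each one to the currently least-loaded runner.
    """
    if runners < 1:
        raise ValueError("need at least one runner")
    # Min-heap of (total_seconds, runner_index); ties go to the lowest index.
    heap = [(0.0, i) for i in range(runners)]
    shards: list[list[str]] = [[] for _ in range(runners)]
    for test, seconds in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        load, idx = heapq.heappop(heap)
        shards[idx].append(test)
        heapq.heappush(heap, (load + seconds, idx))
    return shards
```

Because the split is driven by measured durations rather than test counts, one slow end-to-end suite no longer pins a single runner while the others sit idle.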

3. Quality gates become intelligent

Not all failures should block a release. AI evaluates:

- historical behavior

- code dependencies

- risk zones

- confidence scores

The goal is fewer false stops without reducing safety.
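Here is a rough sketch of how those four signals might combine into a gate decision. The `Failure` fields, the multipliers, and `HIGH_RISK_ZONES` are all hypothetical, and a hand-tuned formula stands in for whatever learned risk model a platform would actually use; the sketch only shows the shape of risk-based gating.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Failure:
    """One failing test plus the signals the gate weighs (all hypothetical)."""
    test: str
    flake_rate: float      # historical: share of past runs that failed, then passed on retry
    touches_change: bool   # dependencies: the test exercises code changed in this PR
    risk_zone: str         # e.g. "payments", "auth", "docs"
    confidence: float      # validator's confidence (0..1) that this is a real defect


HIGH_RISK_ZONES = {"payments", "auth"}  # hypothetical; each team defines its own


def gate_decision(failures: list[Failure], block_at: float = 0.5) -> str:
    """Return 'block', 'warn', or 'pass', driven by the riskiest failure."""
    worst = 0.0
    for f in failures:
        risk = f.confidence * (1.0 - f.flake_rate)  # discount historically flaky tests
        if f.touches_change:
            risk *= 1.5                              # failure lines up with this change
        if f.risk_zone in HIGH_RISK_ZONES:
            risk *= 2.0                              # critical paths get less benefit of the doubt
        worst = max(worst, min(risk, 1.0))
    if worst >= block_at:
        return "block"
    if worst > 0.0:
        return "warn"
    return "pass"
```

A flaky footer-link test alone produces a warning, but a checkout-total failure in the `payments` zone that touches the changed code blocks the release even at moderate confidence.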

4. AI agents validate everything from functionality to semantics

Agents don’t just check assertions—they “reason” through context:

- Does the UI still express the right meaning?

- Do logs reflect expected behavior?

- Does this API response violate known business rules?
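The last question is the easiest to make concrete. Agents reason more flexibly than this, but plain rule checks show the layer that sits above schema assertions: a response can be perfectly valid JSON and still break the business. The rules and field names below are hypothetical examples for an order API.

```python
from typing import Any, Callable

# A rule is a human-readable description plus a check over the response body.
Rule = tuple[str, Callable[[dict[str, Any]], bool]]

# Hypothetical business rules for an order API response.
ORDER_RULES: list[Rule] = [
    ("total is never negative", lambda r: r["total"] >= 0),
    ("line items sum to the total",
     lambda r: round(sum(i["price"] * i["qty"] for i in r["items"]), 2) == round(r["total"], 2)),
    ("currency is a 3-letter uppercase code",
     lambda r: isinstance(r["currency"], str) and len(r["currency"]) == 3
     and r["currency"].isalpha() and r["currency"].isupper()),
]


def violated_rules(response: dict[str, Any], rules: list[Rule]) -> list[str]:
    """Return the description of every rule the response breaks.

    A missing or malformed field counts as a violation rather than a crash.
    """
    broken = []
    for description, check in rules:
        try:
            ok = check(response)
        except (KeyError, TypeError, AttributeError):
            ok = False
        if not ok:
            broken.append(description)
    return broken
```

A response with `"currency": "usd"` and items that don’t add up to `total` passes a typical schema check, yet this reports both violations by name, which is the kind of message a developer can act on.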

5. Observability binds the entire system together

dashboards → trends → drift → release risk → reliability

This transforms quality from a reactive function into a data system.
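“Drift” is the least obvious link in that chain, so here is a minimal sketch of it. It assumes you keep per-test pass/fail history, oldest to newest; the window size and allowed drop are hypothetical defaults. The idea is to catch slow degradation that a single red/green result never shows.

```python
def pass_rate(runs: list[bool]) -> float:
    """Share of runs that passed (True counts as 1, False as 0)."""
    return sum(runs) / len(runs)


def pass_rate_drift(history: list[bool], window: int = 20, max_drop: float = 0.1) -> dict:
    """Compare a test's recent pass rate with its earlier baseline.

    history: run results from oldest to newest (True = passed).
    Flags drift when the last `window` runs pass noticeably less often
    than all the runs before them.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if len(history) < 2 * window:
        return {"status": "insufficient_data"}
    baseline = pass_rate(history[:-window])
    recent = pass_rate(history[-window:])
    drop = baseline - recent
    return {
        "status": "drifting" if drop > max_drop else "stable",
        "baseline": round(baseline, 3),
        "recent": round(recent, 3),
    }
```

A test that passed 40 runs in a row and then only half of its last 20 is reported as drifting (baseline 1.0, recent 0.5), even though any individual run might have looked like ordinary flakiness. Rolled up across tests and services, these signals feed the release-risk view.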

How Engineering Teams Transform When QAOps + AI Land

This transformation isn’t theoretical—it’s cultural and operational. Let’s step into the day-to-day of a team that has embraced QAOps with AI.

- Imagine code merges without fear.

- Imagine developers getting precise feedback in real time.

- Imagine QE engineers finally stepping into the strategic leadership role they were meant to have.

Here is what those shifts look like in practice.

Shift 1 — QE Becomes the Brain, Not the Bottleneck

With AI taking over repetitive validation, QE architects move into higher-order work:

- designing quality governance

- shaping risk models

- training AI validators

- refining domain rules

- monitoring drift and systemic patterns

Quality becomes a strategy, not a task.

Shift 2 — Developers Get Feedback With Context, Not Confusion

Gone are the days of cryptic failures:

“Assertion error at line 237.”

Now developers get:

“Checkout flow failed because your PR introduced a currency formatting inconsistency with migration #119.”

This kind of insight changes engineering culture.

Shift 3 — Releases Stop Feeling Like Cliff Jumps

The fear goes away. Teams trust their pipelines again.

Hotfixes become less frequent.

Rollbacks become rare.

Executives gain confidence in engineering velocity.

Reliability becomes predictable.

This is the quiet miracle of QAOps.

Shift 4 — Quality Becomes Measurable, Explainable, and Visible

Finally, QE leaders can present real metrics that matter to the business.

And leadership listens: this is the hidden ROI of testing.

Pitfalls You Can Avoid (Because Every Team Hits These at the Beginning)

Teams often underestimate the cultural shifts required.

Here are some common pitfalls and the reality behind each one of them:

| Pitfall | Reality Behind the Pitfall |
|---|---|
| AI Isn’t a Magic Wand | Teams often assume AI will automatically “fix testing.” In practice, AI amplifies existing strategy: if your test architecture is weak, AI accelerates the chaos. Strong foundations are still required. |
| Self-Healing Needs Governance | Self-healing works beautifully until it silently “heals” something that should not be healed. Without QE oversight, you risk masking real bugs, drifting expected results, or normalizing regressions. |
| QE Siloing Breaks QAOps | QAOps only works when Dev, QA, and DevOps collaborate in one continuous loop. If QE is isolated, AI signals don’t reach developers, and pipeline insights never become engineering decisions. |
| Lack of Observability Makes Everything Fragile | Without logs, traces, metrics, and dashboards, AI can’t reason about failures, drift, or risk. The result is noisy automation, unclear ownership, and unstable pipelines. Observability is the foundation AI stands on. |

Knowing these ahead of time ensures a smoother QAOps journey.

The Omniit.ai platform isn’t just a test runner; it’s an AI-first Quality Engineering platform designed specifically for QAOps workflows.

A Realistic Roadmap for Teams Ready to Adopt QAOps (Starting This Quarter)

Teams often ask, “Where do we actually begin?” As a starting point, here is a four-phase maturity model that maps directly onto real pipeline evolution:

Phase 1 — Lighten Automation With AI

AI-generated tests, smarter failure grouping, and self-healing.

Phase 2 — Modernize CI/CD for QAOps

Event triggers, ephemeral environments, adaptive quality gates.

Phase 3 — Introduce Agentic Validation

AI agents validate behavior and semantics, not just assertions.

Phase 4 — Build End-to-End Quality Observability

Drift mapping, release risk analysis, stability metrics.

When teams complete this roadmap, release risk drops, productivity rises, and quality becomes a shared, measurable responsibility.

Conclusion: QAOps Isn’t About Testing Faster—It’s About Building With Confidence

DevOps accelerated delivery.

QAOps ensures we don’t fall apart while accelerating.

AI elevates quality from a stage to a living, intelligent function.

And Omniit.ai turns that intelligence into a practical, scalable, deeply integrated reality.

The future isn’t more tests. It is smarter validation woven into every build, every commit, every deployment. With QAOps, teams stop hoping quality will hold—and start knowing it will.